NLP for India

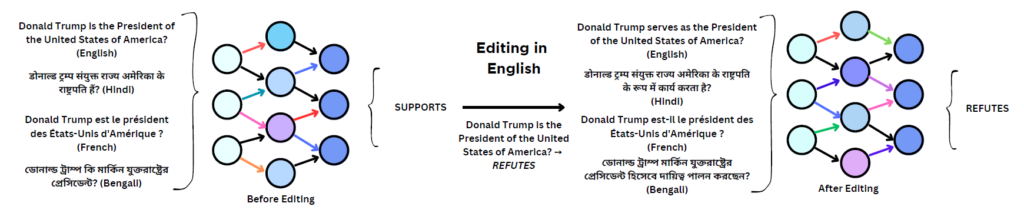

Cross-lingual Editing in Multilingual Language Models

We delve into the realm of cross-lingual model editing (XME), a novel paradigm aimed at enhancing the consistency and efficiency of multilingual NLP models. The core idea revolves around the ability to perform edits or updates in one language and automatically propagate these changes across other languages within the model’s framework. This cross-lingual editing process is not only a step towards more cohesive multilingual models but also addresses the challenges posed by language-specific nuances and variations. Our recent EACL 2024 work extends the standard English-FEVER dataset to 5 more languages (French, Spanish, Hindi, Bengali, and Gujarati), where we utilized MEND, KE, and FT based Model Editing Techniques (METs) for two different architectures: encoder-only (mBERT and XLM-RoBERTa) and decoder-only (BLOOM-560M) models.

Curating And Constructing Benchmarks And Development Of ML Models for Low-level NLP Tasks in Hindi-English Code-mixing

This project aims to create a benchmark and tools for understanding Hindi-English code-mixing, a prevalent yet under-researched phenomenon in Indic social media data. To achieve this, the project will develop a high-quality dataset specifically designed for identifying the language (Hindi or English) of individual words (tokens) in code-mixed text. This dataset will be created by proficient bilingual annotators, ensuring accurate language labels for each word. The project will also compare human annotations against existing automated tools to showcase the benefits of human expertise in providing nuanced and contextually relevant labels with greater accuracy and efficiency. By building this dataset and machine learning models, the project will aid researchers and startups working with large amounts of Hindi-English data. The project has applications in social good areas like hate speech detection and disaster response, and can bridge the gap between language research and policymakers. This project will not only lead to several publications in top-tier NLP conferences and journals, but will also help create a supportive environment for undergraduate and postgraduate students to develop scientific expertise in understanding the large volume of code-mixed text.